In today’s open-source software stack you can find many indispensable dependencies in the form of software libraries. They are logging frameworks, testing frameworks, HTTP libraries, or code style checkers. But it doesn’t happen often that a new library emerges which changes the way we think about computing.

One such library in the data processing and data science space is Apache Arrow. Arrow is used by open-source projects like Apache Parquet, Apache Spark, and pandas, as well as by many commercial or closed-source services. It provides the following functionality:

- In-memory computing

- A standardized columnar storage format

- An IPC and RPC framework for data exchange between processes and nodes respectively

Why is this such a big deal?

In-Memory Computing

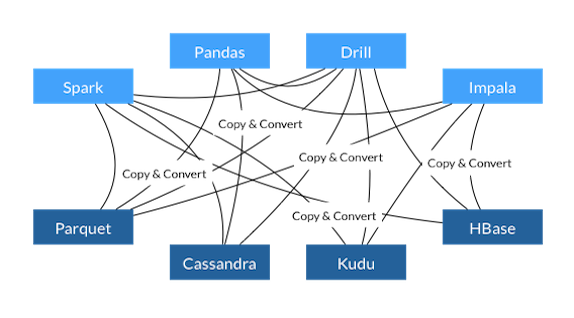

Let’s look at how things worked before Arrow existed:

We can see that in order for Spark to read data from a Parquet file, we needed to read and deserialize the data in the Parquet format. This requires us to make a full copy of the data by loading it into memory. First, we read the data into a memory buffer, then we use Parquet’s conversion methods to turn the data, e.g. a String or a number, into the representation of our programming language. This is necessary because Parquet represents a number differently from how the Python programming language represents it.

This is a pretty big deal for performance for a number of reasons:

- We are copying the data and running conversion steps on it. Because the data is in a different format, we need to read all of it and convert it before doing any computation with it.

- The data we are loading has to fit into memory. Do you only have 8GB of RAM and your data is 10GB? You are out of luck!

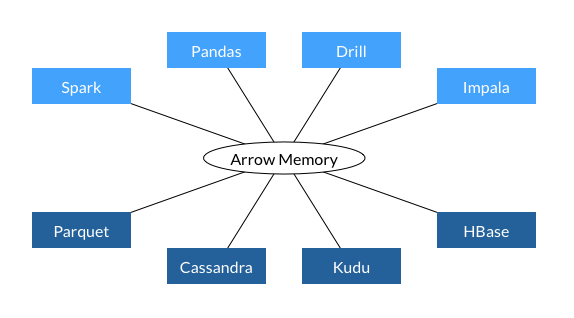

Now let’s look at how Apache Arrow improves this:

Instead of copying and converting the data, Arrow understands how to read and operate on the data directly. For this to work, the Arrow community defined a new file format along with operations that work directly on the serialized data. This data format can be read directly from disk without first loading all of it into memory and converting / deserializing it. Of course, parts of the data are still going to be loaded into RAM, but your data does not have to fit into memory. Arrow memory-maps its files to load only as much data into memory as necessary and possible.
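To make the memory-mapping idea concrete, here is a minimal sketch that uses only the Python standard library, not Arrow itself. It assumes a made-up on-disk layout (a raw run of float64 values in a file called `price.col`) and shows how a column can be summed by viewing the file's bytes in place instead of parsing them into new objects first. The file name and the `sum_column` helper are hypothetical and exist only for this illustration.

```python
import mmap
import os
import tempfile
from array import array

# Hypothetical layout for this sketch: a file that is just a run of float64 values.
prices = array("d", [1.8, 2.5, 0.9, 4.0])
path = os.path.join(tempfile.mkdtemp(), "price.col")
with open(path, "wb") as f:
    prices.tofile(f)


def sum_column(path):
    """Sum a float64 column by viewing the mapped file bytes in place."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            with memoryview(mm) as raw:
                # cast() reinterprets the mapped bytes as doubles;
                # there is no separate parse or conversion step.
                view = raw.cast("d")
                try:
                    return sum(view)
                finally:
                    # Release the view so the mapping can be closed cleanly.
                    view.release()


total = sum_column(path)
print(total)
```

The operating system pages the file in on demand, so only the parts that are actually touched need to be resident in RAM. Arrow's real format adds schemas, metadata, and validity bitmaps on top of this basic idea.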

Standardized Column Storage Format

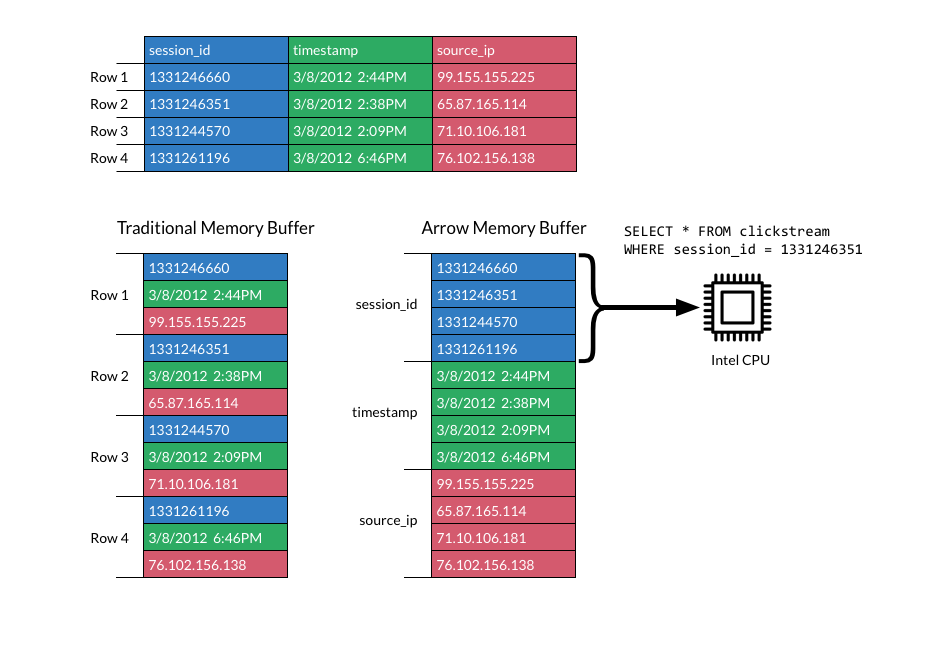

The heart of Apache Arrow is its columnar data format. What does columnar data mean? In traditional file formats or databases, data is stored row-wise. For example, if we had a record with the fields product, quantity, and price:

| Product | Quantity | Price |
|---|---|---|
| Banana | 3 | 1.8 |
| Apple | 5 | 2.5 |

In row-wise storage the data would be stored on disk row by row. That makes a lot of sense. However, if you quickly want to sum up the total price of all the items, you would have to read all the records and extract the price column from them. Wouldn’t it be better if the data already came in a format that allowed us to read the columns efficiently?

Enter columnar storage.

With columnar storage, all the values of a column are stored next to each other. In our example this would look like this:

| Product | Banana | Apple |
|---|---|---|
| Quantity | 3 | 5 |
| Price | 1.8 | 2.5 |

If we store the data like this, we have all the column data in one place and can iterate over it efficiently. Not only is this more efficient in terms of extracting values, but we can also take advantage of modern CPU architectures, which can apply the same operation (e.g. summation) to a contiguous data segment in memory. This is also referred to as Single Instruction Multiple Data (SIMD). It is very efficient due to caching and pipelining at the processor level.
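The difference between the two layouts can be sketched in a few lines of plain Python. This is only an illustration of the data layout, using the example table from above. It does not use Arrow, and pure Python will not give you SIMD speedups. The variable names are made up for this example.

```python
from array import array

# Row-wise: each record keeps all of its fields together.
rows = [
    ("Banana", 3, 1.8),
    ("Apple", 5, 2.5),
]

# Columnar: each field is stored as one contiguous, typed sequence.
columns = {
    "product": ["Banana", "Apple"],
    "quantity": array("q", [3, 5]),   # signed 64-bit integers
    "price": array("d", [1.8, 2.5]),  # 64-bit floats
}

# Row-wise sum: touch every record and pick the price field out of it.
total_from_rows = sum(price for _product, _quantity, price in rows)

# Columnar sum: scan a single contiguous buffer of doubles.
total_from_columns = sum(columns["price"])

print(total_from_rows, total_from_columns)
```

Both sums give the same result, but the columnar version only ever looks at the price values. A native library can hand that contiguous buffer straight to vectorized CPU instructions.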

How much faster is this? The simple answer: a lot faster! Here are some performance numbers from Dremio:

- Parquet and C++: Reading data into Parquet from C++ at up to 4GB/s

- Pandas: Reading into pandas up to 10GB/s

Clearly, the speedup depends on the application but there is no doubt that, besides its functional advantages, Arrow can provide a tremendous performance boost, to the point that it enables new applications which were not feasible before.

Supported languages

The following languages are supported by Apache Arrow:

- C++

- C#

- Go

- Java

- JavaScript

- Rust

- Python (through the C++ library)

- Ruby (through the C++ library)

- R (through the C++ library)

- MATLAB (through the C++ library)

Not just a more efficient file format

IPC (inter-process communication)

It is important to understand that Apache Arrow is not merely an efficient file format. The Arrow library also provides interfaces for communicating across processes or nodes. That means that processes, e.g. a Python and a Java process, can efficiently exchange data without copying it locally. Nodes in a computer network also benefit from this: while the data still has to be transferred over the network, we only need to transfer the relevant columns. In both cases, we don’t have to deserialize data because Arrow understands how to operate directly on the data.

According to Dremio, the following speedup was achieved in PySpark:

- IBM measured a 53x speedup in data processing by Python and Spark after adding support for Arrow in PySpark

RPC (remote procedure call)

Within Arrow there is a project called Flight, which makes it easy to build Arrow-based data endpoints and exchange data between them. Flight is optimized for parallel data access. It is possible to receive data from multiple endpoints in parallel and to request data from a new endpoint while still reading from another one. This highly parallel way of interacting with the services can provide a performance boost for network transfers.

Dremio observed that their Arrow Flight connector achieved 20-50x better performance than ODBC over a TCP connection.

What’s next? Building a query engine on top of Arrow

As of now, to use Arrow you need to know how Arrow works and how the data is stored. Many projects such as pandas have taken advantage of that. However, the missing piece is a query engine on top of Arrow capabilities which would allow users to easily query and process data stored in the Arrow format. The Arrow community is working on that.

Post Mortem

There was a lively discussion on Twitter which brought up DuckDB and vaex as query engines built on top of Arrow. It was also mentioned that DataFusion, a Rust-based query engine, has been donated to Arrow.

Images taken from the Apache Arrow site.