Debugging is the process of identifying the root cause of unexpected behavior in a software program. In software development, bugs are inevitable, no matter how good the programmers are. Distributed systems are no exception in this regard, but they are often more difficult to debug.

Let’s take a look at why this is the case.

The Hierarchy of Complexity for Debugging

There is a hierarchy of complexity when it comes to debugging software:

Level 1: Nonconcurrency

The program to debug is strictly nonconcurrent, meaning there is a single program path which is executed.

These are single-threaded programs, i.e. programs running on a single machine, using only a single CPU core.

Level 2: Concurrency

The program utilizes concurrency in its execution paths. At any point in time there are multiple paths in the program which are executed without a guaranteed order.

Typically, these programs use multiple threads or a comparable concurrency mechanism.

Note that concurrency does not mean that multiple program paths execute at the same time. Rather, it means that the order in which the program paths execute cannot be guaranteed. If the execution paths depend on each other, e.g. one thread needs to hand over a partial result to another thread, correct execution can only be ensured if the two execution paths can execute serially. Serial execution is defined to yield the same result, regardless of the order in which the execution paths run.
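The hand-over of a partial result between two threads can be sketched as follows. This is a minimal illustration, not a prescribed pattern: the function names `producer`, `consumer`, and `run_once` are made up for this example, and a blocking queue from the Python standard library is just one possible way to enforce the dependency.

```python
import queue
import threading


def producer(out: queue.Queue) -> None:
    # Compute a partial result and hand it over to the other thread.
    partial = sum(range(1, 101))
    out.put(partial)


def consumer(inp: queue.Queue, results: list) -> None:
    # get() blocks until the producer has handed over its result, so the
    # dependency is respected no matter which thread is scheduled first.
    partial = inp.get()
    results.append(partial * 2)


def run_once() -> int:
    handover: queue.Queue = queue.Queue()
    results: list = []
    # The consumer is started first on purpose: the outcome must not
    # depend on the order in which the threads start.
    threads = [
        threading.Thread(target=consumer, args=(handover, results)),
        threading.Thread(target=producer, args=(handover,)),
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results[0]
```

Without the blocking `get()`, the consumer could run before the partial result exists, which is exactly the kind of order-dependent bug that makes Level 2 harder to debug than Level 1.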

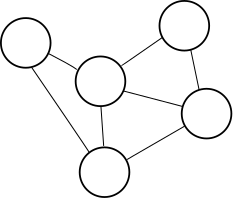

Level 3: Distribution

The program runs in a distributed fashion, meaning it’s composed of multiple independent but interconnected computing nodes. The nodes exchange messages to coordinate the distributed computing. Regardless of whether the individual nodes use concurrency, the overall distributed application is automatically concurrent because each node has its own execution path.

Why debugging distributed systems is hard

Debugging distributed systems is hard because we operate on Level 3, which includes both concurrency and distributed execution as sources of errors. While it may be trivial to read the code to figure out a bug on Level 1, it can become challenging to figure out what different threads on Level 2 are doing. On Level 3, we have another dimension for bugs arising from the message exchange between nodes. It’s often non-trivial to capture the state of a distributed system, as we can’t attach a debugger to all nodes at the same time. Likewise, integration tests are harder to write because they require a test scenario as close to running the actual distributed system as possible.

To understand this better, let’s look at common debugging techniques and how they relate to debugging distributed systems:

Common Debugging Techniques

The following techniques are commonly applied at Level 1 and Level 2. For each one, we also look at what changes at Level 3.

Understanding error messages

As basic as it sounds, understanding the error message or related messages is often enough to fix the cause of a bug. Usually, that requires good knowledge of the internals of the software which reports the error. However, the error message may not be related to the actual cause of the error and thereby distract from the real problem. In any case, the error message is usually the starting point of the investigation.

In a distributed system, obtaining an error message is not always trivial because the actual cause of the error might occur and get logged on a different machine. Error messages are not guaranteed to be propagated back to the client which initiated the request. Understanding the message flow between the nodes of a distributed system, as well as having an infrastructure to obtain metrics and logs from all nodes, is crucial here.

Reading the code

It may seem counter-intuitive, but going back to the code and verifying its desired behavior can be the most effective way to identify and fix bugs.

In a distributed system, the code paths are often spread across multiple modules which execute across many machines. The message exchange may not always be defined clearly and this makes debugging hard. Also, the error could depend on a non-obvious interleaving of messages.

If a bug cannot be properly identified by just reading the code, more information needs to be gathered by using one or more of the following methods.

Using code checkers

Code checkers such as Valgrind (C, C++) or FindBugs / SpotBugs (Java) use a set of rules to detect programming mistakes which can lead to bugs. These can run statically, without executing the program (e.g. FindBugs / SpotBugs), or dynamically at runtime (e.g. Valgrind). It makes sense to inspect the errors or warnings on a regular basis.

While code checkers are good at finding edge cases or memory leaks, they do not cover the whole spectrum of possible bugs. Also, they often just give a hint about an error, and more debugging has to be performed afterwards.

Adding tests

By adding tests for the broken functionality, we can ensure that our assumptions gathered from reading the code hold true. Tests also allow us to check for edge cases in the program execution which are easily missed if checked by hand. Tests come in various forms:

- unit tests

- integration tests

- end-to-end tests

The downside of using testing for debugging is that the test creation is usually biased. Programmers tend to only test for the cases they can imagine. Non-trivial bugs are often hard to find using this method. However, tests are a great way to ensure regressions do not occur once a bug has been identified and fixed.

In distributed systems, testing the actual distributed setup, including the simulation of real-world failure scenarios such as machine failures or network partitions, is hard to do. Developing the proper testing utilities to build a distributed test environment locally can also be challenging.

Bisecting

In debugging, bisecting is the process of running a binary search on the commit log of a version control system such as Git. One starts by declaring the latest known healthy (correctly working, also called good) version and a known unhealthy (buggy, also called bad) version. Using binary search, we then search between the healthy and the unhealthy version. For every version the search visits, the software is built and a test is run which determines whether this version is healthy or not. Eventually, this will find the first commit which was unhealthy. The change set can then reveal information about what introduced the bug.

On a single node, this process is usually efficient if the test runs fast. In a distributed system, this process can become very time-consuming due to the need to deploy a new version every time. Also, this only works efficiently if all tasks can be automated.
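The search itself can be sketched in a few lines. This is an illustration under stated assumptions, not how Git implements `git bisect`: the commit names and the `is_healthy` test are hypothetical, commits are assumed to be ordered from oldest to newest, and every commit after the first unhealthy one is assumed to be unhealthy as well.

```python
from typing import Callable, List, Sequence


def first_bad(commits: Sequence[str], is_healthy: Callable[[str], bool]) -> str:
    """Return the first unhealthy commit.

    Assumes commits[0] is known to be healthy and commits[-1] is known
    to be unhealthy.
    """
    good, bad = 0, len(commits) - 1
    while bad - good > 1:
        mid = (good + bad) // 2
        if is_healthy(commits[mid]):
            good = mid  # the bug was introduced after mid
        else:
            bad = mid   # the bug was introduced at or before mid
    return commits[bad]


# Hypothetical history of 16 commits in which commit c11 introduced the bug.
commits = [f"c{i}" for i in range(16)]
tested: List[str] = []


def is_healthy(commit: str) -> bool:
    # Stand-in for "build this version, deploy it, and run the test".
    tested.append(commit)
    return int(commit[1:]) < 11


culprit = first_bad(commits, is_healthy)
```

In this run only four of the sixteen versions are built and tested. That logarithmic cost is what makes bisecting attractive, and it is also why an expensive `is_healthy` step, such as a full redeployment of a distributed system, dominates the total time.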

Logging

Logs

If logs are available, they can give an idea of what happened before the bug occurred. Logs are usually easily accessible on the same machine.

In a distributed system, logs are spread across multiple machines. Getting access to the right machine and searching the logs becomes much more involved.

If not enough output is available, we may have to generate some output:

printf debugging

printf is a function from the C standard library. In printf debugging, we output information about the state of the program to a console, file, or any other output method. This allows us to understand the behavior of the program better.

The drawback of this method is that it requires us to alter the program itself. In concurrent programs, the added output can change the timing so that a bug no longer shows up, which means the method is not applicable in all scenarios.

In a distributed system, we face the difficulty of deployment costs and time. Deploying a new version of the software might take a considerable amount of time.
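A small helper can make such debug output more useful in concurrent programs by including a timestamp and the name of the current thread. The following is only a sketch: the helper names `debug_line` and `dbg` and the `transfer` function are made up for this example.

```python
import sys
import threading
import time


def debug_line(message: str, **state) -> str:
    """Format one debug line with a timestamp, the thread name, and state."""
    details = " ".join(f"{key}={value!r}" for key, value in sorted(state.items()))
    prefix = f"{time.time():.6f} [{threading.current_thread().name}]"
    return f"{prefix} {message} {details}".rstrip()


def dbg(message: str, **state) -> None:
    # Write to stderr and flush immediately so lines are not lost on a crash.
    print(debug_line(message, **state), file=sys.stderr, flush=True)


def transfer(balance: int, amount: int) -> int:
    # Hypothetical function under investigation, with debug output added.
    dbg("transfer start", balance=balance, amount=amount)
    balance -= amount
    dbg("transfer done", balance=balance)
    return balance
```

Note that the extra I/O in `dbg` is exactly the kind of change that can alter thread timing and hide a concurrency bug.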

Tracing

Tracing tools allow us to understand the instruction flow of the program execution. This can involve the state changes in the program or system calls to the underlying operating system (e.g. strace).

In a distributed system, the tracing needs to be aware of the multiple instances of the software. Otherwise, the trace on a single node may not reveal the necessary information for debugging the problem.

Using a debugger

A debugger is a separate program which attaches to the program we want to debug. Debuggers can gather information about the state of the program at any point in time. They also allow us to set breakpoints at specific instructions or, if the source code is available, at lines of the source code.

Debuggers are very powerful. In the case of concurrent programs (Level 2) using multiple threads, we can halt the execution of all threads and inspect their state.

In distributed systems, there is the hurdle of using the debugger on the correct node. Halting the execution of the program may cause timeouts on other nodes and provoke an unwanted failure scenario in the distributed system.

Profiling

In profiling, we can sample CPU, memory, or disk usage. For statically compiled languages such as C/C++, we usually need to recompile to enable profiling information. For dynamically compiled languages with a runtime environment such as Java with its JVM, we can use a profiler such as JProfiler which gathers the information from the running program without having to recompile.

A profiler can be useful to detect memory leaks or performance-related bugs. Like debugging, profiling is performed per node. The results have to be inspected individually, or we can collect and merge them to get an overview of all the nodes in a distributed system.

Debugging Techniques for Distributed Systems

We have already learned that the overall complexity in debugging distributed systems is higher. Here are a few techniques which, in addition to the debugging techniques already described, can help with debugging distributed systems:

Contracts and documentation

Without an understanding of the communication between nodes in a distributed system, it is often impossible to debug. Specifying the message protocol between the nodes of a distributed system provides a reference for debugging illegal message exchange. Modern distributed system architectures like the Actor model have made documenting message exchange easier, yet it is still up to developers to enforce and document contracts.

Defensive programming

Whenever contracts or assumptions the code makes are violated, we should print out a warning or fail with an appropriate error message. This provides feedback to developers and users early on in the software cycle and allows us to fix bugs before they are shipped to the customer, and before they become hard to fix.
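As a sketch of what failing early can look like at a message boundary, the following validates an incoming message before it is processed. The `Heartbeat` message, its fields, and the `ContractViolation` exception are hypothetical and only serve as an example of a contract between nodes.

```python
from dataclasses import dataclass


class ContractViolation(Exception):
    """Raised when an incoming message violates the agreed protocol."""


@dataclass(frozen=True)
class Heartbeat:
    node_id: str
    sequence: int


def parse_heartbeat(raw: dict, last_sequence: int) -> Heartbeat:
    # Fail loudly and with context instead of carrying bad data further.
    missing = {"node_id", "sequence"} - set(raw)
    if missing:
        raise ContractViolation(f"heartbeat is missing fields: {sorted(missing)}")
    node_id, sequence = raw["node_id"], raw["sequence"]
    if not isinstance(node_id, str) or not node_id:
        raise ContractViolation(f"node_id must be a non-empty string, got {node_id!r}")
    if isinstance(sequence, bool) or not isinstance(sequence, int):
        raise ContractViolation(f"sequence must be an integer, got {sequence!r}")
    if sequence <= last_sequence:
        raise ContractViolation(
            f"sequence {sequence} from {node_id} is not newer than {last_sequence}"
        )
    return Heartbeat(node_id, sequence)
```

An error such as "sequence 3 from n1 is not newer than 5" points directly at a reordered or duplicated message, which is much easier to act on than a failure that surfaces later on a different node.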

Better tests

Instrumentation for integration tests

Code should be easy to test. If test utilities allow for an easy setup of local versions of the distributed system, more tests will be written. This can be achieved by parameterizing components to allow them to run locally instead of fully distributed.
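One way to parameterize components is to hide the network behind a small interface and inject an in-process implementation during tests. The sketch below assumes a hypothetical key-value node; the names `Transport`, `InMemoryTransport`, `KeyValueNode`, and `Client` are invented for this example, and a real deployment would inject a network-backed transport with the same `send` method.

```python
from typing import Callable, Dict, Optional, Protocol


class Transport(Protocol):
    def send(self, target: str, message: dict) -> dict: ...


class InMemoryTransport:
    """Delivers messages via direct function calls inside one process."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[dict], dict]] = {}

    def register(self, name: str, handler: Callable[[dict], dict]) -> None:
        self._handlers[name] = handler

    def send(self, target: str, message: dict) -> dict:
        return self._handlers[target](message)


class KeyValueNode:
    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def handle(self, message: dict) -> dict:
        if message["op"] == "put":
            self._data[message["key"]] = message["value"]
            return {"ok": True}
        if message["op"] == "get":
            return {"ok": True, "value": self._data.get(message["key"])}
        return {"ok": False, "error": f"unknown op {message['op']!r}"}


class Client:
    # The client only knows the Transport interface, not how it is implemented.
    def __init__(self, transport: Transport, target: str) -> None:
        self._transport = transport
        self._target = target

    def put(self, key: str, value: str) -> bool:
        reply = self._transport.send(self._target, {"op": "put", "key": key, "value": value})
        return reply["ok"]

    def get(self, key: str) -> Optional[str]:
        return self._transport.send(self._target, {"op": "get", "key": key})["value"]


def build_local_cluster() -> Client:
    transport = InMemoryTransport()
    transport.register("kv-1", KeyValueNode().handle)
    return Client(transport, "kv-1")
```

A test can call `build_local_cluster()` and exercise the same client and node code that runs in production, without starting processes or opening sockets.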

End-to-end tests

End-to-end tests are a great way to see if the common code paths work correctly across all nodes. Compared to unit or integration tests, these are more expensive in terms of setup and computing resources but they also provide the most realistic test scenario. The downside is that end-to-end tests are not good at testing edge cases which can be more easily tested using unit or integration tests.

Remote Debugging

In remote debugging, we connect a locally running debugger to a remote node of the distributed system. This allows us to use the same features as if we were debugging a locally running program. The key problems here are identifying which node to connect to and avoiding network timeouts while debugging parts of the distributed system.

Distributed logging

Log collection and visualization

Log collection tools (e.g. Logstash) collect and transform log files, then insert them into a data store (e.g. Elasticsearch). Visualization tools (e.g. Kibana) allow you to query and visualize the data across all nodes of a distributed system or selectively for certain nodes. This can be very helpful when looking for error messages or certain outputs. Prometheus follows a similar collect, store, and query pattern, but for numeric metrics rather than log lines.
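The core idea of looking at logs across nodes can be illustrated with a toy merge of per-node logs into one timeline. This is not how any of the tools above work internally. The log format (a numeric timestamp followed by a message) and the node names are assumptions for this sketch, and it further assumes that each node's log is already sorted and that the clocks of the nodes are reasonably synchronized.

```python
import heapq
from typing import Dict, Iterable, Iterator, List, Tuple

LogRecord = Tuple[float, str, str]  # (timestamp, node, message)


def parse(node: str, lines: Iterable[str]) -> Iterator[LogRecord]:
    for line in lines:
        timestamp, message = line.split(" ", 1)
        yield float(timestamp), node, message


def merge_logs(logs_by_node: Dict[str, List[str]]) -> List[LogRecord]:
    # heapq.merge lazily merges already-sorted streams into one sorted stream.
    streams = [parse(node, lines) for node, lines in logs_by_node.items()]
    return list(heapq.merge(*streams))


# Hypothetical logs from two nodes.
logs = {
    "node-a": ["1.0 request received", "3.0 response sent"],
    "node-b": ["2.0 ERROR disk full"],
}
merged = merge_logs(logs)
errors = [record for record in merged if "ERROR" in record[2]]
```

The merged timeline shows that the error on `node-b` happened between the request and the response on `node-a`, a relationship that is invisible when each log is read on its own machine.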

Metrics

In addition to logs, distributed systems usually emit metrics which can indicate what is happening during execution. Apart from application-specific metrics, standard metrics like CPU usage, memory usage, and network saturation are typically available.

Distributed tracing

In distributed tracing, we extend the tracing for a single node to a distributed system. For example, we could store all messages exchanged such that we can replay the messages to reproduce the bug. Note that this way of replaying may not always work because the state changes within the nodes might also be important for reproducing the bug. This is where deterministic replay becomes interesting.
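Recording and replaying messages can be sketched as follows. The `RecordingNetwork` class, the `Account` node, and the message format are hypothetical; the sketch also assumes that the replayed node starts from the same initial state as the original, which is precisely the limitation mentioned above.

```python
from typing import Callable, Dict, List, Tuple

Message = Tuple[str, str, dict]  # (sender, receiver, payload)
Handler = Callable[[dict], None]


class RecordingNetwork:
    """Delivers messages in-process and keeps a copy of each one."""

    def __init__(self) -> None:
        self.handlers: Dict[str, Handler] = {}
        self.recorded: List[Message] = []

    def register(self, name: str, handler: Handler) -> None:
        self.handlers[name] = handler

    def send(self, sender: str, receiver: str, payload: dict) -> None:
        # Store a copy so later mutation of the payload cannot alter the record.
        self.recorded.append((sender, receiver, dict(payload)))
        self.handlers[receiver](payload)


def replay(messages: List[Message], handlers: Dict[str, Handler]) -> None:
    # Feed the recorded messages to fresh nodes in the recorded order.
    for _sender, receiver, payload in messages:
        handlers[receiver](dict(payload))


class Account:
    def __init__(self) -> None:
        self.balance = 0

    def handle(self, payload: dict) -> None:
        self.balance += payload["delta"]


# Original run: the balance unexpectedly becomes negative.
live = Account()
network = RecordingNetwork()
network.register("account", live.handle)
network.send("client-1", "account", {"delta": 50})
network.send("client-2", "account", {"delta": -80})

# Replay against a fresh node to reproduce the same state for inspection.
fresh = Account()
replay(network.recorded, {"account": fresh.handle})
```

After the replay, `fresh.balance` matches `live.balance`, so the faulty state can be examined locally with a debugger instead of on the original node.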

Distributed deterministic simulation and replay

It’s a typical scenario that we see a bug occur, even during testing, but we can’t reproduce the bug by running the test again. If only there were a way to deterministically replay the test run. It turns out that this is possible by creating a simulation layer which abstracts the underlying hardware and the network interfaces to allow for an exact replay of all the state changes and messages exchanged between the nodes. To the best of my knowledge, this was pioneered by FoundationDB.
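The principle can be shown with a toy simulation in which a single seeded random number generator makes every scheduling decision. This is a minimal sketch and not how FoundationDB implements its simulation; the scenario (two clients writing to one register over a lossy, reordering network) and the `simulate` function are invented for illustration.

```python
import random
from typing import List, Tuple


def simulate(seed: int, messages_per_client: int = 5) -> Tuple[int, List[str]]:
    """Run a toy simulation whose only source of nondeterminism is `seed`."""
    rng = random.Random(seed)  # all "random" decisions come from this generator
    in_flight = [
        (client, value)
        for client in ("a", "b")
        for value in range(messages_per_client)
    ]
    register = -1  # state of the single simulated server
    trace: List[str] = []
    while in_flight:
        # The simulated network picks which message arrives next.
        client, value = in_flight.pop(rng.randrange(len(in_flight)))
        if rng.random() < 0.2:  # simulated message loss
            trace.append(f"drop {client}:{value}")
            continue
        register = value
        trace.append(f"deliver {client}:{value}")
    return register, trace
```

Calling `simulate` twice with the same seed yields the same final state and the same trace, so a failing test run can be reproduced exactly by logging only its seed. The hard part in a real system is routing every source of nondeterminism (time, threads, disk, network) through such a controlled layer.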

Model checking

By building a model of the concurrent and distributed aspects of the system, we can formally specify important aspects of it. Further, we can then run model checks to see if the system is guaranteed to run correctly, according to our model. One language which is used by Amazon and Microsoft is TLA+.
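To give a feeling for what a model checker does, the following hand-rolled sketch explores every interleaving of a tiny model and searches for a state that violates an invariant. It is written in Python rather than TLA+ and is far simpler than a real model checker. The model itself is an assumption chosen for illustration: two processes each increment a shared counter with a separate read step and write step.

```python
from typing import Iterator, List, Optional, Tuple

# State: (counter, program counters of both processes, local copies)
State = Tuple[int, Tuple[int, int], Tuple[int, int]]


def next_states(state: State) -> Iterator[Tuple[str, State]]:
    counter, pcs, local = state
    for p in (0, 1):
        new_pcs = list(pcs)
        if pcs[p] == 0:  # step 1: read the shared counter into a local copy
            new_local = list(local)
            new_local[p] = counter
            new_pcs[p] = 1
            yield f"p{p} reads {counter}", (counter, tuple(new_pcs), tuple(new_local))
        elif pcs[p] == 1:  # step 2: write the local copy plus one back
            new_pcs[p] = 2
            yield f"p{p} writes {local[p] + 1}", (local[p] + 1, tuple(new_pcs), local)


def check(initial: State) -> Optional[List[str]]:
    """Explore all reachable states; return a trace that breaks the invariant."""
    stack: List[Tuple[State, List[str]]] = [(initial, [])]
    seen = set()
    while stack:
        state, trace = stack.pop()
        if state in seen:
            continue
        seen.add(state)
        counter, pcs, _ = state
        # Invariant: once both processes are done, the counter must be 2.
        if pcs == (2, 2) and counter != 2:
            return trace
        for label, successor in next_states(state):
            stack.append((successor, trace + [label]))
    return None


counterexample = check((0, (0, 0), (0, 0)))
```

The search returns a four-step trace in which both processes read the counter before either writes it, so one increment is lost. A test that runs the two processes a handful of times might never hit this interleaving, whereas the exhaustive exploration is guaranteed to find it within the model.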

Conclusion

Debugging distributed systems is hard, but not impossible. With the right tools and practices it is a reasonable endeavour. Did I miss something here? Let me know via email or feel free to comment on the Twitter thread.